In a perfect world, we don’t have to worry about errors. That’s not this world, however. Here, handling errors can easily be half the effort of doing things.

We’ll look at a basic test structure, several ways to report errors, and options for when we want to handle errors.

Basic Test Structure

When you’re driving a method into existence, you exercise zero or more successful return modes and zero or more failure modes, each in a separate test. (People tend to remember to drive out the successful modes more often than the unsuccessful ones.)

If possible, create at least one scenario with no errors to report. That ensures that the method doesn’t always return an error.

Then, for each error scenario, write a test like this:

- Set up the error scenario

- Call the method being tested

- Check that the proper error was triggered. (This includes things like checking an exception’s error message or exception chain, if you care about those.)
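The three steps above can be sketched with Python's `unittest` module. This is only an illustration: `parse_age` and its error message are hypothetical names invented for the example, not part of any real library.

```python
import unittest


def parse_age(text):
    """Hypothetical function under test: convert text to a non-negative age."""
    age = int(text)  # int() raises ValueError for non-numeric text
    if age < 0:
        raise ValueError(f"age must not be negative: {age}")
    return age


class ParseAgeTest(unittest.TestCase):
    def test_valid_input_reports_no_error(self):
        # At least one scenario with no error, so we know the
        # function does not always fail.
        self.assertEqual(parse_age("42"), 42)

    def test_negative_age_is_rejected(self):
        bad_input = "-1"  # 1. set up the error scenario
        with self.assertRaises(ValueError) as caught:
            parse_age(bad_input)  # 2. call the method being tested
        # 3. check that the proper error was triggered, including its message
        self.assertIn("must not be negative", str(caught.exception))
```

Saved in a file, the tests can be run with `python -m unittest`.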

Code That Reports Exceptional Conditions

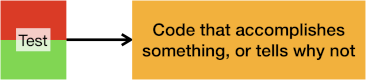

We often want code to either accomplish something, or report what prevented it doing so. For example, if we’re searching a data structure for a value, we might use a nil return value or an exception to indicate that it was not found.

There are many ways to report errors or exceptional situations. TDD can be used to drive any of these. That doesn’t make them all equally good. You need to judge your circumstances, your programming language, and the consequences of each design option.

Error Reporting

What should code do to let other code know there’s an error? Here are several choices.

Set a Flag

Setting an error flag is probably the oldest and lowest-level solution. (Think of a CPU with an overflow flag.) The code sets a boolean value (on its own class or somewhere more global). Client code is responsible for checking the flag and figuring out what to do.

This can go beyond a single bit, of course – an object might have an arbitrary structure holding an error, or even a set of multiple errors.

The Test – Check that the flag is set properly.
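As a minimal sketch of a flag test, assume a hypothetical `Counter8` class that mimics an 8-bit register with an overflow flag. The class and its attribute names are made up for illustration.

```python
import unittest


class Counter8:
    """Hypothetical 8-bit counter that reports overflow through a flag."""

    MAX = 255

    def __init__(self):
        self.value = 0
        self.overflowed = False  # the error flag; clients must check it

    def add(self, amount):
        total = self.value + amount
        self.overflowed = total > self.MAX
        self.value = total % (self.MAX + 1)  # wrap around, as hardware would


class Counter8Test(unittest.TestCase):
    def test_flag_is_clear_when_the_sum_fits(self):
        counter = Counter8()
        counter.add(200)
        self.assertFalse(counter.overflowed)
        self.assertEqual(counter.value, 200)

    def test_flag_is_set_on_overflow(self):
        counter = Counter8()
        counter.add(200)
        counter.add(100)
        self.assertTrue(counter.overflowed)
        self.assertEqual(counter.value, 44)  # 300 wraps to 300 - 256
```

Note that the second test checks the flag and the wrapped value separately; nothing forces client code to look at the flag, which is the main weakness of this style.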

Error Return (including nil)

Another approach is to have a return value that is (or includes) a status code. For example, the POSIX read() function returns the number of bytes read, or -1 if there was an error.

This approach was popular with C, and experience there revealed a common problem: people would forget or not bother to check the return value. If you ever wrote printf("Hello world") without checking what it returned, congratulations, you’re in that crowd with me and many others.

Some languages require you to explicitly ignore error codes, if that’s what you want to do.

We’ll include “returning nil” as an example of this: we’ve extended the domain with a special value.

The Test – Check that the return value is as expected.
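Here is one way such a test might look in Python, where `None` plays the role of nil. The `find_index` function is a hypothetical example, not a standard library call.

```python
import unittest


def find_index(items, target):
    """Hypothetical search: return the index of target, or None if absent."""
    for index, item in enumerate(items):
        if item == target:
            return index
    return None  # the special value that extends the return domain


class FindIndexTest(unittest.TestCase):
    def test_returns_index_when_found(self):
        self.assertEqual(find_index(["a", "b", "c"], "a"), 0)

    def test_returns_none_when_not_found(self):
        self.assertIsNone(find_index(["a", "b", "c"], "z"))
```

The first test deliberately finds the item at index 0. In Python, 0 is falsy just as `None` is, so client code that writes `if not find_index(...)` would confuse "found at the start" with "not found"; the tests use `assertEqual` and `assertIsNone` to keep the two cases distinct.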

Exception

Many languages have the notion of an exception – an indirect flow of control that unwinds the call stack. An exception travels up the call stack until some method takes responsibility for handling it.

The good side of this is that the “happy path” code is straightforward, not interrupted by constant error checks.

The Test – Catch the error and check that it’s as expected. Make sure that “nothing thrown” is detected as a test failure. Many test frameworks have an assertion designed for exceptions.
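The sketch below shows both a framework assertion and a hand-written check, using Python's `unittest`. `ConfigError` and `load_port` are hypothetical names chosen for the example.

```python
import unittest


class ConfigError(Exception):
    """Hypothetical application-level error."""


def load_port(settings):
    """Hypothetical function under test: read a port number from a dict."""
    try:
        return int(settings["port"])
    except KeyError as missing:
        # Chain the low-level error so callers can inspect the cause.
        raise ConfigError("no port configured") from missing


class LoadPortTest(unittest.TestCase):
    def test_missing_port_raises_config_error(self):
        # assertRaises fails the test if nothing is raised.
        with self.assertRaises(ConfigError) as caught:
            load_port({})
        self.assertEqual(str(caught.exception), "no port configured")
        self.assertIsInstance(caught.exception.__cause__, KeyError)

    def test_missing_port_checked_by_hand(self):
        # The same check without the framework helper: the explicit
        # fail() is what turns "nothing thrown" into a test failure.
        try:
            load_port({})
        except ConfigError:
            return
        self.fail("expected ConfigError, but nothing was raised")
```

Without the final `self.fail(...)`, the hand-written version would pass silently when no exception is raised, which is exactly the trap described above.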

Notification

The mechanisms so far require the calling code to handle errors. We can decouple this using a notification approach. Code that wants to handle errors registers to receive notifications. When an error occurs, these listeners are notified, and they handle it.

Some languages have a built-in publish-subscribe mechanism. Lacking that, you can build a listener registration mechanism.

The Test – Register the test class as a listener to the notifying object; check that it was notified. (Note that you’ll also need to test-drive the handlers themselves.)
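A minimal sketch of this, assuming a hand-built listener mechanism: the `Importer` class, its `add_error_listener` method, and the `on_error` listener method are all hypothetical.

```python
import unittest


class Importer:
    """Hypothetical object that notifies registered listeners of errors."""

    def __init__(self):
        self._listeners = []

    def add_error_listener(self, listener):
        self._listeners.append(listener)

    def import_line(self, line):
        if "," not in line:
            for listener in self._listeners:
                listener.on_error(f"malformed line: {line!r}")
            return None
        return line.split(",")


class ImporterTest(unittest.TestCase):
    def setUp(self):
        self.errors = []

    def on_error(self, message):
        # The test case itself acts as the listener and records what it hears.
        self.errors.append(message)

    def test_listener_is_notified_of_malformed_line(self):
        importer = Importer()
        importer.add_error_listener(self)
        importer.import_line("no comma here")
        self.assertEqual(self.errors, ["malformed line: 'no comma here'"])

    def test_listener_is_not_notified_for_valid_line(self):
        importer = Importer()
        importer.add_error_listener(self)
        self.assertEqual(importer.import_line("a,b"), ["a", "b"])
        self.assertEqual(self.errors, [])
```

Recording the messages in a list lets the test check both that a notification arrived and what it said.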

Callback

Some languages use a “callback” function to report an error (or occasionally to report success).

The Test – Check that the callback is called (with any arguments if expected). Something like this:

```
testCallbackReporting() {
    bool wasCalled = false;
    // other setup too

    // Pass a callback that records the fact that it was invoked
    methodUnderTest(parameters, () => { wasCalled = true; });

    assertTrue(wasCalled);
}
```

Code That Handles Errors

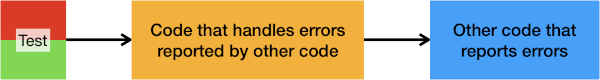

Until now, we’ve looked at code that should report an error, and tested that it does. But some code receives an error from another object, and must handle it properly.

This requires our test to set up two (or more) objects: the code that reports the errors, and the code that handles the errors.

However, there’s a complication. Lots of code that reports errors is complex, slow, or can’t easily trigger the error we want to see. (How will you convince your database layer to report that the database went down?)

One way to handle this is to not test with the “real” code, but rather set up the tested method to use a test double that reports the desired error, without the overhead of working with the real object.

In general, the test looks like this:

- Create a test double that will report the error we want to check

- Install the test double so that the code under test will interact with it rather than with the real code

- Call the method under test

  - It calls the test double

  - The test double reports the error

  - The method under test handles the error

- The test verifies that the code under test handled the error. (If necessary, it examines the object under test or the test double.)

Since you’re driving the handling code from the test, this pushes the code under test to be easily configured with the test double. If you’re working with legacy code, you may have to refactor to make this possible.
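The steps above might look like this in Python. Everything here is a hypothetical sketch: `StatusPage` is the code under test, and `FailingDatabase` is a hand-written test double that it receives through its constructor.

```python
import unittest


class FailingDatabase:
    """Test double: stands in for the real database and always fails."""

    def __init__(self):
        self.calls = 0

    def fetch_user_count(self):
        self.calls += 1
        raise ConnectionError("database is down")


class StatusPage:
    """Hypothetical code under test; the database is passed in."""

    def __init__(self, database):
        self._database = database

    def summary(self):
        try:
            return f"{self._database.fetch_user_count()} users"
        except ConnectionError:
            return "user count unavailable"


class StatusPageTest(unittest.TestCase):
    def test_summary_survives_database_outage(self):
        database = FailingDatabase()  # create the test double
        page = StatusPage(database)   # install it in place of the real one
        result = page.summary()       # call the method under test
        # Verify that the error was handled.
        self.assertEqual(result, "user count unavailable")
        # Examine the double to confirm it was actually used.
        self.assertEqual(database.calls, 1)
```

Passing the database into the constructor is one simple way to make the double installable; other designs (setters, factories, a mocking library) would serve the same purpose.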

How Are Errors Handled?

The reported error (from the test double or the real code) will typically take one of the forms we described earlier: flag, error return, exception, notification, callback.

If your handling code is itself going to report the error, it need not use the same approach as the code that reported the error to it. For example, the test double may return a nil value, and the tested code may turn that into an exception.

If the error is passed along, you’ll need to check that any translation is correct.
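As an illustration of testing a translation, the sketch below has a test double that returns `None` and code under test that converts it into an exception. `EmptyDirectory`, `email_for`, and `UserNotFound` are hypothetical names.

```python
import unittest


class UserNotFound(Exception):
    """Hypothetical error reported by the code under test."""


class EmptyDirectory:
    """Test double: a lookup that reports failure by returning None."""

    def lookup(self, user_id):
        return None


def email_for(directory, user_id):
    """Hypothetical code under test: translate None into an exception."""
    record = directory.lookup(user_id)
    if record is None:
        raise UserNotFound(f"no user with id {user_id}")
    return record["email"]


class EmailForTest(unittest.TestCase):
    def test_none_is_translated_into_user_not_found(self):
        with self.assertRaises(UserNotFound) as caught:
            email_for(EmptyDirectory(), 17)
        # Check that the translation carried the details across.
        self.assertEqual(str(caught.exception), "no user with id 17")
```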

There are a couple of alternatives to reporting an error: ignoring the error, or retrying.

Your handling may have other side effects; you’ll need to decide whether and how to test those. For example, the handling code may log an exception that it receives. In some systems, this is informal and not worth testing. In others, the log is a crucial legal requirement, and must be tested.

Your code under test may need to deallocate space, close connections, etc. when an error occurs. You’ll want to test those side effects, which may require privileged access to the object under test.
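One way to check a cleanup side effect is to let the test double record it. In this sketch, the hypothetical `BrokenConnection` double remembers whether `close()` was called, so the test needs no privileged access to the hypothetical `upload` function.

```python
import unittest


class BrokenConnection:
    """Test double: fails on send and records whether it was closed."""

    def __init__(self):
        self.closed = False

    def send(self, payload):
        raise OSError("network unreachable")

    def close(self):
        self.closed = True


def upload(connection, payload):
    """Hypothetical code under test: always release the connection."""
    try:
        connection.send(payload)
    finally:
        connection.close()


class UploadTest(unittest.TestCase):
    def test_connection_is_closed_when_send_fails(self):
        connection = BrokenConnection()
        with self.assertRaises(OSError):
            upload(connection, b"data")
        self.assertTrue(connection.closed)
```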

Ignore / Absorb the Error

In some cases, we want to ignore (absorb) an error.

The Test – Check that no error was reported.
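A sketch of such a test, assuming a hypothetical `handle_request` function that treats metrics as best-effort and a hypothetical `FailingMetrics` double:

```python
import unittest


class FailingMetrics:
    """Test double: a metrics client whose every call fails."""

    def __init__(self):
        self.calls = 0

    def increment(self, name):
        self.calls += 1
        raise TimeoutError("metrics server did not respond")


def handle_request(metrics):
    """Hypothetical code under test: metrics are best-effort only."""
    try:
        metrics.increment("requests")
    except TimeoutError:
        pass  # deliberately absorbed; the request should still succeed
    return "ok"


class HandleRequestTest(unittest.TestCase):
    def test_metrics_failure_is_absorbed(self):
        metrics = FailingMetrics()
        # Any exception escaping handle_request would fail this test.
        self.assertEqual(handle_request(metrics), "ok")
        # Confirm the error path really ran, so the test is not vacuous.
        self.assertEqual(metrics.calls, 1)
```

Checking the call count matters here: a test that only asserts "nothing went wrong" would also pass if the failing code were never reached.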

Retry

In other cases, we want to try the reporting code again, hoping that the error goes away. (If it doesn’t work within a limited number of tries, we usually still report an error.)

The simplest version of this is that we have our call to the reporting code in a loop or sequence that tries several times. In more sophisticated versions, we might add in delays. Or we might have “failover” services that we try.

Your test double must be prepared to report the error the number of times you want before returning a legitimate value. It may need to count the number of times it was called (so the test can check).

The Test – You’ll almost certainly need multiple tests. You’ll need to test where:

- No error occurs on the first call

- An error occurs the first (or nth) time, but not on the retry

- So many errors occur that the code gives up on retry, and itself reports an error
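The three cases above could be covered like this. The sketch assumes the simplest retry design, a loop with no delays; `FlakyService` and `fetch_with_retry` are hypothetical names, and the limit of three attempts is arbitrary.

```python
import unittest


class FlakyService:
    """Test double: fails a set number of times, then succeeds."""

    def __init__(self, failures):
        self._failures_left = failures
        self.calls = 0

    def fetch(self):
        self.calls += 1
        if self._failures_left > 0:
            self._failures_left -= 1
            raise ConnectionError("temporary failure")
        return "payload"


def fetch_with_retry(service, max_attempts=3):
    """Hypothetical code under test: retry, then give up and re-raise."""
    for attempt in range(1, max_attempts + 1):
        try:
            return service.fetch()
        except ConnectionError:
            if attempt == max_attempts:
                raise


class FetchWithRetryTest(unittest.TestCase):
    def test_no_error_on_first_call(self):
        service = FlakyService(failures=0)
        self.assertEqual(fetch_with_retry(service), "payload")
        self.assertEqual(service.calls, 1)

    def test_error_then_success_on_retry(self):
        service = FlakyService(failures=2)
        self.assertEqual(fetch_with_retry(service), "payload")
        self.assertEqual(service.calls, 3)

    def test_gives_up_after_too_many_errors(self):
        service = FlakyService(failures=5)
        with self.assertRaises(ConnectionError):
            fetch_with_retry(service)
        self.assertEqual(service.calls, 3)
```

The call counter on the double is what lets each test confirm how many attempts were actually made.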

Conclusion

There are two broad categories of error-related code:

- Code that reports errors. We discussed how to test-drive various reporting styles: flag, error return, exception, notification, callback.

- Code that handles errors. (It calls code that reports errors, and can itself report errors.) We discussed using test doubles to create the errors to handle. We also looked at two more alternatives: ignore or retry.

Don’t get so caught up in writing the “positive” code that accomplishes something that you forget to test the “negative” code that considers possible errors.