T is for Testable in the INVEST model.

A testable story is one for which, given any inputs, we can agree on the expected system behavior and/or outputs.

Testability doesn’t require that all tests be defined up front before the first line of code is written. We just need to be appropriately confident that we could define and agree on them.

Testability doesn’t require that tests be automated. There are advantages to automation, but tests can be useful without it.

Testability doesn’t require that we treat tests as a full specification, where any missing piece brings down the whole edifice. Instead, we focus on interesting tests that clarify confusing or controversial decisions.

Testing, even using tests as a collaborative tool, is not a new thing. There are tools to help specify tests – xUnit, Cucumber, Fit, or even plain English. But tools aren’t enough; there are still plenty of mistakes you can make.

We’ll look at challenges you face regardless of tool or technique:

- Magic

- Intentional Fuzziness

- Computational Infeasibility

- Non-Determinism

- Subjectivity

- Research Projects

Magic

Some tests rely on magic or intuition. Unfortunately (withholding judgment about humans), computers have neither.

A test sets up a context, has inputs and/or steps, and produces output and/or side effects.

Just because we can write inputs and outputs doesn’t mean we have a good test. The outputs must also be a function of the inputs; they can’t depend on other unstated inputs.

For example, we’d like to have a program to predict tomorrow’s stock price, given its previous prices.

We have lots of test cases – easily a century’s worth of examples for hundreds of stocks.

But, at its heart, this is a desire for magic, unless we believe something very unlikely: that the stock’s price is determined only by the history of its prices. Suppose 50% of the world’s oil refineries will go offline for 6 months. Do you really believe that news wouldn’t affect oil, airline, and automobile stocks?
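A smaller-scale version of the same problem is a function whose result depends on an input the test never states. The sketch below is a hypothetical illustration (the shipping-fee rule and both function names are invented for this example, not taken from any real system): the first version quietly reads today’s date, so no fixed input/output pair can pin it down; the second makes that input explicit.

```python
from datetime import date


def shipping_fee_hidden(order_total):
    # Hidden input: the result changes with the calendar,
    # which no test case for this function ever states.
    if date.today().weekday() >= 5:  # Saturday or Sunday
        return 0.0
    return 5.0 if order_total < 50 else 0.0


def shipping_fee(order_total, on_date):
    # Every input the result depends on is now an explicit parameter,
    # so the output is a function of the stated inputs.
    if on_date.weekday() >= 5:  # Saturday or Sunday
        return 0.0
    return 5.0 if order_total < 50 else 0.0
```

With the date passed in, a test can say “a 20.00 order on a Saturday ships free” and expect the same answer every time it runs.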

Intentional Fuzziness

In some cases, algorithms exist but choices have to be made. Some fuzziness is accidental but some is intentional: Sales wants red, and Marketing wants green. Rather than make a choice that will annoy one side or the other, the test hides behind fuzziness like “the appropriate color”.

Computational Infeasibility

Some things are computable, perhaps even easy to specify, but aren’t feasible above a certain size. For example, finding the exact shortest route that visits every stop on a large map (the traveling salesman problem) is very expensive.

You may be able to loosen your criteria and settle for an approximation. In the map, when distances obey the triangle inequality, getting within a factor of 2 of the shortest route is substantially cheaper.
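To make the trade-off concrete, here is a minimal sketch. It assumes the map task is a round trip that visits every stop, with straight-line distances between stops; that reading, and all the function names, are assumptions made for this illustration. `exact_tour` tries every ordering, which grows factorially with the number of stops. `approx_tour` walks a minimum spanning tree in preorder, a well-known technique that stays within twice the optimum when distances obey the triangle inequality.

```python
import math
from itertools import permutations


def tour_length(points, order):
    # Length of the closed tour that visits the points in the given order.
    return sum(
        math.dist(points[order[i]], points[order[(i + 1) % len(order)]])
        for i in range(len(order))
    )


def exact_tour(points):
    # Brute force: every ordering that starts at stop 0, (n - 1)! candidates.
    rest = range(1, len(points))
    return min(
        ((0,) + perm for perm in permutations(rest)),
        key=lambda order: tour_length(points, order),
    )


def approx_tour(points):
    # Build a minimum spanning tree with Prim's algorithm (O(n^2) work)...
    n = len(points)
    children = {i: [] for i in range(n)}
    best = {i: (math.dist(points[0], points[i]), 0) for i in range(1, n)}
    while best:
        node = min(best, key=lambda i: best[i][0])
        _, parent = best.pop(node)
        children[parent].append(node)
        for other in best:
            d = math.dist(points[node], points[other])
            if d < best[other][0]:
                best[other] = (d, node)
    # ...then visit the stops in preorder of that tree.
    order, stack = [], [0]
    while stack:
        node = stack.pop()
        order.append(node)
        stack.extend(reversed(children[node]))
    return tuple(order)
```

A test for the approximate version would not assert one specific route. It would assert the looser criterion instead: the route visits every stop once, and its length is at most twice the best known length.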

Non-Determinism

Some non-determinism comes from randomness or pseudo-randomness.

Other non-determinism happens when there is a range or set of acceptable answers.

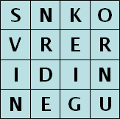

For example, I want a puzzle-making program that, given a list of words, produces a Boggle™ board containing them: a grid of letters, where you form words by bouncing between letters one at a time in any direction.

If it can be done at all, it can certainly be done multiple ways, since given one solution you can generate others by mirroring or rotation. (You might have multiple solutions that differ by more than that.)

I don’t care which solution is picked; any valid solution is ok.
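When any valid answer is acceptable, a test can check the property that makes an answer valid rather than compare against one expected board. The following is a rough sketch under a few assumptions that go beyond the description above: the board is a list of equal-length strings, moves may go to any of the eight neighboring cells, and a cell may be used only once per word. The names `contains_word` and `is_valid_board` are hypothetical.

```python
def contains_word(board, word):
    # True if the word can be traced through neighboring cells
    # (diagonals included), using each cell at most once.
    if not word:
        return True
    rows, cols = len(board), len(board[0])

    def search(r, c, i, used):
        if board[r][c] != word[i]:
            return False
        if i == len(word) - 1:
            return True
        used = used | {(r, c)}
        for dr in (-1, 0, 1):
            for dc in (-1, 0, 1):
                nr, nc = r + dr, c + dc
                if (
                    (dr or dc)
                    and 0 <= nr < rows
                    and 0 <= nc < cols
                    and (nr, nc) not in used
                    and search(nr, nc, i + 1, used)
                ):
                    return True
        return False

    return any(
        search(r, c, 0, frozenset())
        for r in range(rows)
        for c in range(cols)
    )


def is_valid_board(board, words):
    # A board is acceptable if every requested word can be found on it.
    return all(contains_word(board, word) for word in words)
```

A board and its mirror image both pass `is_valid_board` for the same word list, so the test never has to list every acceptable solution.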

Finally, floating-point arithmetic is only an approximation of real-number arithmetic. Because of variations in computation, you might have answers that vary by some epsilon.

The first challenge with non-determinism is recognizing when it happens. When it does, you may have to specify when two answers are equivalent or equally acceptable.
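Two common ways to tame this in a test, sketched here with Python’s standard library: compare floating-point results within a tolerance instead of exactly, and fix the seed of a pseudo-random generator so a run can be repeated. The helper names and the tolerance values are illustrative choices, not recommendations.

```python
import math
import random


def same_answer(a, b, rel_tol=1e-9, abs_tol=1e-12):
    # Treat two floating-point results as equivalent if they differ
    # by no more than the stated tolerances.
    return math.isclose(a, b, rel_tol=rel_tol, abs_tol=abs_tol)


def roll_dice(count, seed):
    # A private generator with a fixed seed yields the same
    # sequence on every call, so tests can rely on it.
    rng = random.Random(seed)
    return [rng.randint(1, 6) for _ in range(count)]
```

For instance, `0.1 + 0.2 == 0.3` is false in binary floating point, while `same_answer(0.1 + 0.2, 0.3)` is true. The tolerance itself then becomes something the team has to agree on.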

Subjectivity

Some tests are well-defined but appeal to subjective standards.

For example, “7 of 10 people agree it’s fun.” Refine what you mean by “people” all you want – but “fun” is subjective.

Usability and other “non-functional” characteristics often have the same sort of challenge: “9 of 10 [suitable] users can complete the standard task in <5 minutes with 30 minutes of training.”

Subjective tests come with no guarantee of their feasibility. Furthermore, they can be extremely expensive to test. It is not wrong to have them, but you must recognize there’s no guarantee about how easily subjective standards can be met.

Research Projects

It is possible to specify a valid test without actually knowing how to make it pass. (Testable? Yes! Risky? Very!)

That is: you can write tests for something you don’t know how to implement, and any estimate you make is suspect because you’re estimating a research project.

For example, consider the challenge of identifying pedestrians in a scene. Given an image, we could poll 1000 people to identify pedestrians with a high level of confidence. But we’re not clear on how people make that call – we suspect it can be done algorithmically, but proving that has been a multi-year research project. (Progress in self-driving cars suggests it is possible.)

There’s nothing wrong with having a research project if that’s what you need and you accept the risk. Just don’t fool yourself into thinking it’s a SMOP – Simple Matter of Programming – when it’s not.

Testable Trigger Words

Given all the testing challenges mentioned above, how do we make sure we’re writing good tests?

I’ve found there are trigger words that can warn you about potential problems in the tests or in the discussion around them:

- “Just” – such a little word, but it’s often used as a power move, to minimize the importance of concerns without actually addressing them. (“Just check each of these against all the others.”)

- “Appropriate,” “right,” “suitable” – of course you want the appropriate thing done – but that doesn’t define what “appropriate” actually means.

- “Best,” “worst” – saying “best” doesn’t define best.

- “Most,” “least,” “shortest,” “longest” – if you’re clear about what you want the most or least of, that helps, but these words can also hide computationally expensive choices.

- “All combinations,” “all permutations” – these words may signal things that are well-defined but computationally infeasible.

- “Any of,” “don’t care” – these phrases often occur with non-deterministic examples. They’re legitimate, but it can be tricky to specify test cases with such examples. (Will you list all possible solutions?)

- “Fun,” “easy to use,” “people,” “like,” … – these are common in non-functional attributes, or may hide a research project.

- “I’ll know it when I see it” – there’s no chance you’ll get this right in one round.
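If you want a mechanical first pass, the list above is easy to turn into a simple scanner. This is only a sketch: the category labels are my own grouping of the bullets, the function name is hypothetical, and a hit is a prompt for a conversation rather than proof of a problem.

```python
import re

# Trigger phrases from the list above, grouped under illustrative labels.
TRIGGER_WORDS = {
    "minimizing": ["just"],
    "undefined standard": ["appropriate", "right", "suitable", "best", "worst"],
    "possibly expensive": [
        "most", "least", "shortest", "longest",
        "all combinations", "all permutations",
    ],
    "non-deterministic": ["any of", "don't care"],
    "subjective": ["fun", "easy to use", "people", "like"],
    "unspecified": ["i'll know it when i see it"],
}


def find_trigger_words(text):
    # Return (category, phrase) pairs for every trigger phrase that
    # appears as a whole word or phrase in the text.
    normalized = text.lower().replace("’", "'")
    hits = []
    for category, phrases in TRIGGER_WORDS.items():
        for phrase in phrases:
            if re.search(r"\b" + re.escape(phrase) + r"\b", normalized):
                hits.append((category, phrase))
    return hits
```

Running it over “Just pick the appropriate color” flags both “just” and “appropriate”, while “Adjust the brightness” produces no hits because matching is on whole words.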

Summary

Testable stories help ensure that teams agree on what’s wanted. Tests support collaboration: they help clear up muddy thinking, and help people build a shared understanding.

We’ve looked at several challenges with tests:

- Troubles to fix: Magic, Intentional Fuzziness

- Challenges to be aware of: Computational Infeasibility, Non-Determinism

- Risks not to accept accidentally: Subjectivity, Research Projects

We closed with some trigger words that can indicate when you’re facing testing challenges.

This is the close of the series taking a more detailed look at the INVEST model. I’ve included links to the other articles below.