In Part 1, we discussed models for graphics. As we move into testing, let’s start with the visible part: the display.

Test Display Capabilities

You sometimes care about the physical capabilities of your display. For example, your system may require a minimum resolution or a minimum animation speed. There are static and dynamic tools to help you assess your system’s capabilities.

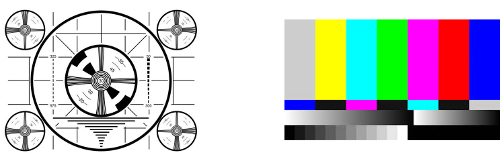

Example 1: TV Test Pattern (Static).

These images were developed for early televisions, to help show whether lines bow in or out, whether everything is properly symmetric, and whether the colors show properly. Modern LCD displays have their own calibration tools.

Example 2: Stadium Display (Dynamic).

It’s not sufficient to look at a static view – displays are made to change the view. Go to the ballpark early, and you may see a dynamic test of their large display:

- Draw all white (or each color) – detect burnt-out bulbs

- Draw all black – detect stuck-on bulbs

- Alternate row or column colors – check for bulbs that aren’t independent

- “Move” stripes and/or images – make sure animation works
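
The sequence above can be sketched as a small pattern generator. This is only an illustration: it assumes a display modeled as a grid of on/off bulbs, and the function names (`solid`, `alternating_rows`, `moving_stripes`, `pattern_sequence`) are made up for this example rather than taken from any real scoreboard software.

```python
# Hypothetical model: a frame is a list of rows, each row a list of 0/1 bulb states.

def solid(width, height, on):
    """All bulbs on (1) or all bulbs off (0)."""
    value = 1 if on else 0
    return [[value] * width for _ in range(height)]


def alternating_rows(width, height):
    """Even rows lit, odd rows dark."""
    return [[1 - (y % 2)] * width for y in range(height)]


def moving_stripes(width, height, stripe, offset):
    """Vertical stripes `stripe` bulbs wide, shifted right by `offset` bulbs."""
    return [
        [1 if ((x - offset) // stripe) % 2 == 0 else 0 for x in range(width)]
        for _ in range(height)
    ]


def pattern_sequence(width, height, stripe=2):
    """Yield (name, frame) pairs in the order of the list above."""
    yield "all on", solid(width, height, True)
    yield "all off", solid(width, height, False)
    yield "alternating rows", alternating_rows(width, height)
    for offset in range(2 * stripe):  # one full cycle of the stripe pattern
        yield f"stripes +{offset}", moving_stripes(width, height, stripe, offset)


def render(frame):
    """Text rendering so a person can eyeball each frame."""
    return "\n".join("".join("#" if bulb else "." for bulb in row) for row in frame)


for name, frame in pattern_sequence(8, 3):
    print(name)
    print(render(frame))
```

Someone still has to look at the real display while these frames play; the code only guarantees that the patterns themselves are systematic.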

Example 3: Graphics Card Tests (Dynamic).

As fancier graphics have become common for computer and gaming systems, there are tests like 3DMark or Heaven Benchmark that are designed to test the performance of graphics cards by showing elaborate images and animations. These measure things like frames per second or polygons per second.
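
To make "frames per second" concrete, here is a minimal sketch of how such a number can be derived from frame timestamps. It is not how 3DMark or Heaven work internally; `measure`, `frames_per_second`, and `slowest_frame` are hypothetical helpers, and `draw_frame` stands in for whatever your system does to render one frame.

```python
import time


def frames_per_second(timestamps):
    """Average FPS over a list of frame-completion times, in seconds."""
    if len(timestamps) < 2:
        raise ValueError("need at least two timestamps")
    elapsed = timestamps[-1] - timestamps[0]
    if elapsed <= 0:
        raise ValueError("timestamps must be increasing")
    return (len(timestamps) - 1) / elapsed


def slowest_frame(timestamps):
    """Longest gap between consecutive frames, in seconds."""
    return max(later - earlier for earlier, later in zip(timestamps, timestamps[1:]))


def measure(draw_frame, frame_count):
    """Call draw_frame repeatedly; return a start time plus one time per frame."""
    stamps = [time.perf_counter()]
    for _ in range(frame_count):
        draw_frame()
        stamps.append(time.perf_counter())
    return stamps


# Fixed timestamps keep the arithmetic easy to follow: 4 frames in 2 seconds.
print(frames_per_second([0.0, 0.5, 1.0, 1.5, 2.0]))
print(slowest_frame([0.0, 0.25, 1.0]))
```

The average hides stutter, which is why the sketch also reports the slowest single frame.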

Test Screen Sizes, Resolution, and Rotation

When the iPhone first came out, it came in one size. Layout was easy: one basic layout, maybe a second for rotation. Today, between iPhone and iPad, there are numerous possible screen sizes. Android has always had a variety.

How do you test every possible screen size when there are so many possibilities? You can’t. You have to figure out a compromise.

There are many ways to fail. The easiest is to test only one configuration, or test one configuration 99% of the time, and play with a couple others occasionally. (“Works on my machine.”)

Another failure: Rely only on automated tests. If your automated tests focus only on functionality, they will miss presentation issues. If they focus on presentation, they’re brittle – and it’s very hard to specify every positioning relationship that you care about.

What can you do? You must use a mix of testing approaches and compromise based on real-world needs.

- Mix automated and manual tests.

- Test the most common configurations.

- Test the extremes: shortest, tallest, narrowest, widest, lowest resolution, highest resolution.

- See what happens when the user manually resizes the window (e.g., in a web browser) or rotates the display (usually on a phone or tablet).

- Lean on simulators (if your IDE supports them) but recognize their limitations. For example, Xcode for iPhone development has simulators for each released phone, but these don’t support full multi-touch or all the sensors.
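
One way to turn "most common plus extremes" into a concrete test list is sketched below. Everything here is an assumption for illustration: the `Screen` type, the device names, the sizes, and especially the usage shares, which are made up and should come from your own analytics. "Resolution" is approximated as total pixel count.

```python
from typing import NamedTuple


class Screen(NamedTuple):
    name: str
    width: int    # pixels
    height: int   # pixels
    share: float  # fraction of users (made-up numbers below)


def rotated(screen):
    """The same screen turned 90 degrees."""
    return Screen(screen.name + " (rotated)", screen.height, screen.width, screen.share)


def pick_test_set(screens, top_n=2):
    """Most common configurations first, then the extremes, without duplicates."""
    common = sorted(screens, key=lambda s: s.share, reverse=True)[:top_n]
    extremes = [
        min(screens, key=lambda s: s.width),              # narrowest
        max(screens, key=lambda s: s.width),              # widest
        min(screens, key=lambda s: s.height),             # shortest
        max(screens, key=lambda s: s.height),             # tallest
        min(screens, key=lambda s: s.width * s.height),   # lowest resolution
        max(screens, key=lambda s: s.width * s.height),   # highest resolution
    ]
    chosen = []
    for screen in common + extremes:
        if screen not in chosen:
            chosen.append(screen)
    return chosen


def with_rotations(screens):
    """Each screen followed by its rotated variant."""
    return [variant for s in screens for variant in (s, rotated(s))]


SCREENS = [
    Screen("small phone", 320, 568, 0.05),
    Screen("common phone", 390, 844, 0.40),
    Screen("large phone", 430, 932, 0.25),
    Screen("tablet", 820, 1180, 0.20),
    Screen("big tablet", 1024, 1366, 0.10),
]

for screen in with_rotations(pick_test_set(SCREENS)):
    print(f"{screen.name}: {screen.width}x{screen.height}")
```

The resulting list is a starting point for both manual passes and automated layout checks, not a replacement for occasionally trying the configurations it leaves out.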

Test Changing Color Palettes

Just as screen sizes can change, color palettes can change. This is less of an issue with modern computers that use 24-bit color (or higher), but it still can happen. Also, some applications allow palettes to change, e.g., to personalize the colors.

It’s possible that some of these tests could be automated, but everybody I’ve run into has done these tests mostly manually.

Test:

- Both full color and black & white.

- Grayscale. Not only do some people prefer grayscale, it also gives a rough idea of how some color-blind people may see your interface.

- Accessibility-related palettes. For example, on a Mac you can invert colors, or choose higher or lower contrast.

- Both the most common configurations and the extremes.

- How color and screen resolution interact: more colors may limit the maximum resolution.

- Moving between palettes (especially when this is application-level functionality – it’s easy to miss a needed refresh).

- Moving between screens with differing depths.
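
The grayscale check is one of the few items here that is easy to partly automate. The sketch below converts each palette color to a gray level using the Rec. 601 luma weights and flags pairs that end up nearly identical. The palette, the `min_difference` threshold of 40, and the function names are all assumptions chosen for illustration; this is a coarse screen, not a substitute for looking at the real interface.

```python
from itertools import combinations


def to_gray(rgb):
    """Approximate brightness (0-255) using the Rec. 601 luma weights."""
    r, g, b = rgb
    return 0.299 * r + 0.587 * g + 0.114 * b


def gray_collisions(palette, min_difference=40):
    """Return pairs of color names whose gray levels are closer than min_difference."""
    clashes = []
    for (name_a, rgb_a), (name_b, rgb_b) in combinations(palette.items(), 2):
        if abs(to_gray(rgb_a) - to_gray(rgb_b)) < min_difference:
            clashes.append((name_a, name_b))
    return clashes


# Hypothetical application palette.
PALETTE = {
    "error": (255, 0, 0),
    "ok": (0, 130, 0),
    "background": (255, 255, 255),
    "text": (0, 0, 0),
}

print(gray_collisions(PALETTE))  # prints [('error', 'ok')]
```

Here the red "error" color and the green "ok" color look very different in full color but land on almost the same gray, so an interface that relies on color alone to tell them apart would fail this check.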

Test via Slow Drawing and/or Highlighted Drawing

Sometimes, especially for performance, you may care how things are drawn, not just the end result.

For example, some GUI systems use a redraw() approach where you are notified about what area of the screen is “dirty” and needs to be redrawn. You have various options: you could redraw the whole screen, a bounding box containing the dirty area, or just the dirty area itself. You may find that you’re drawing parts that never will be rendered, you’re drawing parts in the wrong order, or you redraw the same area more than once.
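
The three redraw options differ a lot in how many pixels they touch. The sketch below compares them for a made-up case; rectangles are `(x, y, width, height)` tuples, and the helper names are hypothetical rather than part of any GUI toolkit. The union is counted by brute force, which is fine for small examples but not something you would ship.

```python
def area(rect):
    """Pixel count of one rectangle."""
    _, _, width, height = rect
    return width * height


def bounding_box(rects):
    """Smallest single rectangle that contains every rectangle in rects."""
    left = min(x for x, y, w, h in rects)
    top = min(y for x, y, w, h in rects)
    right = max(x + w for x, y, w, h in rects)
    bottom = max(y + h for x, y, w, h in rects)
    return (left, top, right - left, bottom - top)


def covered_pixels(rects):
    """Pixel count of the union of rects, counting overlaps once."""
    pixels = set()
    for x, y, w, h in rects:
        for px in range(x, x + w):
            for py in range(y, y + h):
                pixels.add((px, py))
    return len(pixels)


def redraw_costs(screen, dirty):
    """Pixels touched by each of the three redraw strategies."""
    return {
        "whole screen": area(screen),
        "bounding box": area(bounding_box(dirty)),
        "dirty area only": covered_pixels(dirty),
    }


screen = (0, 0, 100, 100)
dirty = [(10, 10, 20, 20), (70, 60, 20, 20)]  # two small, far-apart changes
for strategy, pixels in redraw_costs(screen, dirty).items():
    print(f"{strategy}: {pixels} pixels")
```

With two small changes in opposite corners, the bounding box covers 5,600 pixels while the dirty areas themselves cover only 800, which is the kind of gap that slow or highlighted drawing makes visible.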

Some systems provide a way to slow down or highlight the drawing being done. For example, Java has a DebugGraphics class that can draw everything extra slowly, and can flash each area before it is drawn. This lets you see exactly what is being updated. (This class can also log drawing commands to a console.)

You get to see the dynamic process, but it has the downside that you have to watch it. Also, it operates at a specific level: the canvas level. This may not help at higher or lower levels.
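
If watching is the problem, the logging idea can be taken a step further: record the drawing commands and let a test inspect them. The sketch below is not DebugGraphics and not any real toolkit API. `RecordingCanvas` and `paint` are hypothetical stand-ins, and the canvas only understands a single `fill_rect` command.

```python
from collections import Counter


class RecordingCanvas:
    """Hypothetical canvas that logs fill_rect calls instead of drawing them."""

    def __init__(self):
        self.log = []          # every command, in call order
        self.hits = Counter()  # how many times each pixel was painted

    def fill_rect(self, x, y, w, h, color):
        self.log.append(("fill_rect", x, y, w, h, color))
        for px in range(x, x + w):
            for py in range(y, y + h):
                self.hits[(px, py)] += 1

    def overdrawn_pixels(self):
        """Number of pixels painted more than once."""
        return sum(1 for count in self.hits.values() if count > 1)

    def duplicate_commands(self):
        """Commands that were issued more than once with identical arguments."""
        counts = Counter(self.log)
        return [command for command, count in counts.items() if count > 1]


def paint(canvas):
    """Stand-in for the drawing code under test."""
    canvas.fill_rect(0, 0, 10, 10, "white")  # background
    canvas.fill_rect(2, 2, 4, 4, "blue")     # widget
    canvas.fill_rect(2, 2, 4, 4, "blue")     # accidental second draw


canvas = RecordingCanvas()
paint(canvas)
print(len(canvas.log), "commands")
print(canvas.overdrawn_pixels(), "pixels painted more than once")
print(canvas.duplicate_commands())
```

Some overdraw is normal (the widget is painted over the background), so a test built on this would more likely assert a budget or check for exact duplicates than demand zero overdraw. Like the slow-drawing tools, it still only sees the canvas level.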

Conclusion

We have looked at several display-focused testing approaches. These are almost always manual tests.

In Part 3, we’ll look at tests that can be better automated.

Other Articles in This Series

- “Models: Testing Graphics, Part 1”

- “Screen-Based Tests: Testing Graphics, Part 3”

- “Internal Objects: Testing Graphics, Part 4”